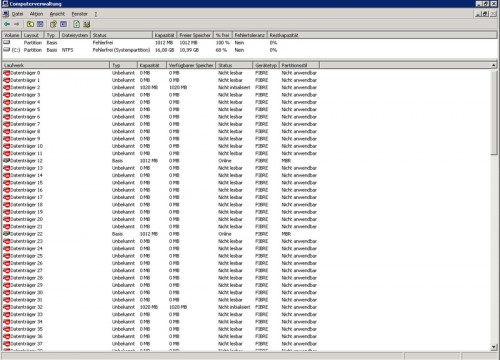

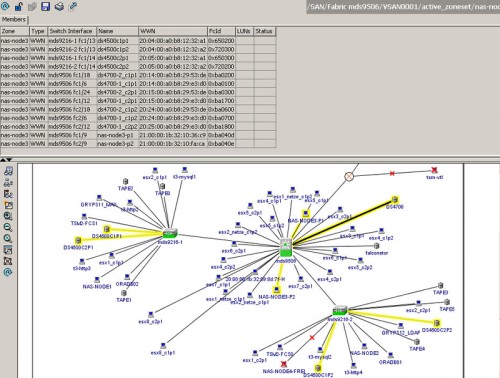

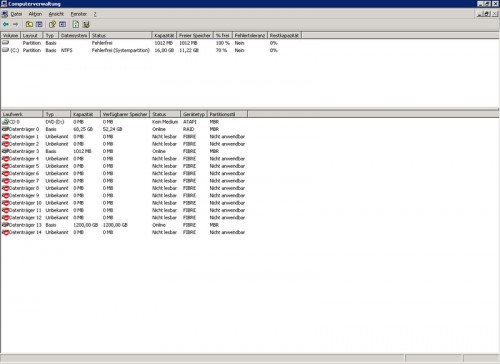

Well, once again the Microsoft Cluster on VMware bit my ass … As you might know, MSCS on VMware is a particular kind of pain: with each upgrade you end up with the same problems over and over again (SCSI reservations on the RDM LUNs being one, the passive node not booting being the other).

So I opened up another support case with VMware, and they responded like this:

Please see this kb entry: http://kb.vmware.com/kb/1016106

This doesn’t completely fit my case, but since the only active cluster-node failed yesterday evening (it’s only our internal file-share server, thus no worries), I thought I’d try to set the options.

```powershell
param( [string] $vCenter, [string] $cluster, [string] $device_identifier )

# Add the VI-Snapin if it isn't loaded already
if ( (Get-PSSnapin -Name "VMware.VimAutomation.Core" -ErrorAction SilentlyContinue) -eq $null ) {
    Add-PSSnapin -Name "VMware.VimAutomation.Core"
}

if ( !($vCenter) -or !($cluster) -or !($device_identifier) ) {
    Write-Host `n "cluster-fix-mscs-lun: <vcenter> <cluster> <device_identifier>" `n
    Write-Host "cluster-fix-mscs-lun sets the specified device to be perennially reserved"
    Write-Host " thus a) the affected host(s) boot faster"
    Write-Host " and b) the affected passive nodes actually boots up" `n
    exit 1
}

# Turn off Errors
$ErrorActionPreference = "silentlycontinue"

Connect-VIServer -Server $vCenter

$VMhosts = Get-Cluster $cluster | Get-VMHost

foreach ($vmhost in $VMhosts) {
    # Create the ESXCLI command line
    $esxcli = Get-ESXCLI -VMHost $vmhost

    # List settings for device
    $esxcli.storage.core.device.list($device_identifier)

    # Set the specified LUN to PerenniallyReserved
    $esxcli.storage.core.device.setconfig($false, $device_identifier, $true)

    # Print verification for the above action
    $esxcli.storage.core.device.list($device_identifier)
}

Disconnect-VIServer -server $vCenter -Confirm:$false
```
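The script needs the device identifier (the `naa.…` name) of each RDM LUN, and it handles one LUN per run. Here is a rough sketch of how one might look those identifiers up with PowerCLI and then call the script once per LUN. It assumes the script was saved as `cluster-fix-mscs-lun.ps1` (the file name is a guess based on the usage message). The vCenter name, cluster name and VM name pattern are made-up placeholders, so adjust them to your environment.

```powershell
# Placeholder values (assumptions, not taken from a real environment)
$vCenter   = "vcenter.example.com"
$cluster   = "Cluster01"
$vmPattern = "mscs-node*"    # hypothetical naming pattern of the MSCS node VMs

Connect-VIServer -Server $vCenter

# Collect the canonical names (naa.*) of all physical-mode RDMs
# attached to the MSCS node VMs, without duplicates
$rdmLuns = Get-Cluster $cluster | Get-VM -Name $vmPattern |
    Get-HardDisk -DiskType RawPhysical |
    Select-Object -ExpandProperty ScsiCanonicalName |
    Sort-Object -Unique

Disconnect-VIServer -Server $vCenter -Confirm:$false

# Run the script once per LUN; it connects to vCenter on its own
foreach ($lun in $rdmLuns) {
    .\cluster-fix-mscs-lun.ps1 $vCenter $cluster $lun
}
```

If your cluster uses virtual-mode RDMs, `-DiskType RawVirtual` would be the value to try instead. Check the list in `$rdmLuns` before running the loop, since the flag is set on every host in the cluster.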

And guess what? My damn cluster works again 🙂