As I previously said, I was writing my own OCF resource agent for IBM’s Tivoli Storage Manager Server. And I just finished it yesterday evening (it took me about two hours to write this post).

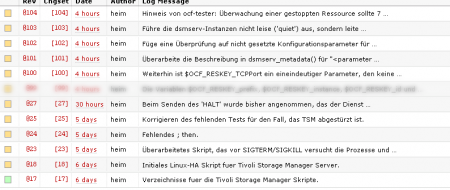

It only took me about four work days (roughly four hours each, which weren’t recorded in that Subversion repository), plus most of this week at home (10 hours a day) and about one hundred Subversion revisions. The good part is that it actually just works 😀 (I was amazed at how well, actually). Now you’re gonna say, “But Christian, why didn’t you use the included init script and just fix it up so it’s actually compliant with the LSB standard?”

The answer is rather simple: yeah, I could have done that, but you also know that wouldn’t have been fun. Life is all about learning, and learn something I did (even if I banged my head against the wall from time to time 😉 during those few days) … There are still a few things I might want to add or change in the future (maybe next week), like:

- adding support for monitor depth by querying the dsmserv instance via dsmadmc (if you read through the resource agent, I already use it for the shutdown/pre-shutdown stuff)

- I still have to test it properly in a pre-production environment (as Alan Robertson mentioned in his hour-and-a-half talk on Linux-HA 2.0; see pages 100-102 of his slides)

- I’ll probably configure the IBM RSA to act as a STONITH device (shoot the other node in the head), just in case one of the nodes ever gets stuck in a state where the box is still up but no longer reacts to any requests
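
To make the monitor-depth item from the list a bit more concrete, here is a minimal sketch of how a depth-aware monitor could look. It is written in Python purely for illustration and is not taken from the actual resource agent. The function names, the PID-file approach, the threshold of 10 for the "deep" check, and the exact `dsmadmc` invocation (`-id`, `-password`, `-dataonly=yes`, `query status`) are all assumptions on my part; only the three OCF exit codes are the standard values.

```python
import os
import subprocess

# Standard OCF exit codes
OCF_SUCCESS = 0
OCF_ERR_GENERIC = 1
OCF_NOT_RUNNING = 7


def process_running(pidfile):
    """Return True if the PID stored in pidfile belongs to a live process."""
    try:
        with open(pidfile) as fh:
            pid = int(fh.read().strip())
        os.kill(pid, 0)  # signal 0 only checks that the process exists
        return True
    except PermissionError:
        return True  # process exists but belongs to another user
    except (OSError, ValueError):
        return False  # no PID file, unparsable content, or no such process


def dsmadmc_responds(admin_id, password):
    """Hypothetical deep check: ask the server for its status via dsmadmc.

    The command line below is an assumption; adjust it to your installation.
    """
    cmd = ["dsmadmc", f"-id={admin_id}", f"-password={password}",
           "-dataonly=yes", "query status"]
    try:
        result = subprocess.run(cmd, stdout=subprocess.DEVNULL,
                                stderr=subprocess.DEVNULL, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


def monitor(pidfile, check_level=0, query=None):
    """Shallow check: is the process alive? Deep check: does it answer?

    `query` is any callable returning True/False; it only runs when
    check_level is 10 or higher (the threshold is an assumption here).
    """
    if not process_running(pidfile):
        return OCF_NOT_RUNNING
    if check_level < 10 or query is None:
        return OCF_SUCCESS
    return OCF_SUCCESS if query() else OCF_ERR_GENERIC
```

In a real agent the check level would come from the cluster manager (typically via the `OCF_RESKEY_OCF_CHECK_LEVEL` environment variable), and `query` could be something like `lambda: dsmadmc_responds("admin", "secret")`, with the credentials being placeholders. Passing the deep check in as a callable keeps the cheap process check usable on its own when `dsmadmc` isn't available.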