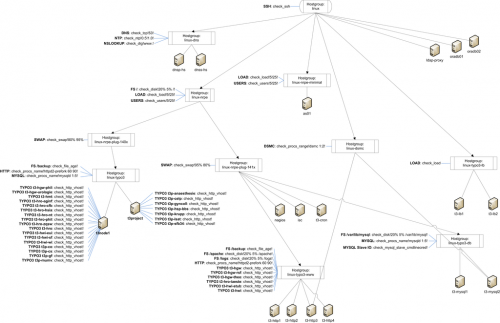

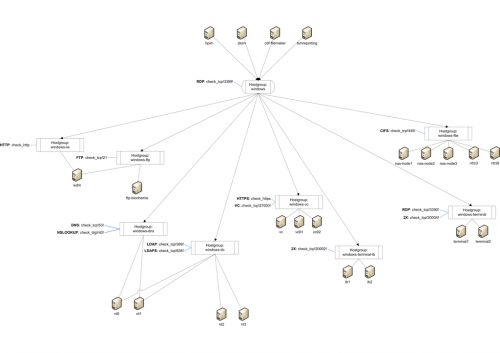

Well, most of you already know that I’m a Nagios fanatic. I like to monitor as many aspects as I possibly can. So, yesterday I started figuring out ways to monitor our different cluster groups (housing a bunch of file shares, try above 20,000).

My first tries failed horribly: I brought down a complete cluster group, resulting in a major annoyance. So today I went at it a bit smarter 😛 I cloned myself two VMs off my Windows Server 2003 Enterprise R2 template and created a new cluster.

After that, I tried it again on the test cluster, with the same result. The resource is created successfully, but once I try to bring it online, it fails and moves the whole cluster group to the other node (it keeps cycling between the cluster nodes with no end).

After that, I figured something had to be wrong with the command I was trying to use, the one given in the NSClient++ wiki. I then tried the command on the command line, and as soon as I hit <TAB> (oooold bash habit 😛 ), it completed the path but put quotes around it … Don’t ask me.

If I try the path without the quotes, no joy at all. Once you put quotes around it, everything becomes hunky-dory and the resource comes online without the slightest trouble!

Hint to self: When creating an NSClient++ cluster resource (or any application resource using a command that needs switches, for that matter), use a quoted command line along the lines of this:

    "Q:_nsclientnsclient.exe" /test
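
If you end up scripting this, here is a minimal Python sketch of the same hint: always wrap the executable path in double quotes and append the switches after it. The helper name `quoted_command_line` and the example path are made up for illustration; this only builds the string and does not touch the cluster in any way.

```python
def quoted_command_line(exe_path, *switches):
    """Build a command line with the executable path in double quotes.

    Hypothetical helper: it only formats a string, following the
    "quote the path, then add the switches" hint from this post.
    """
    # Drop quotes that are already there so the result is not double-quoted.
    exe = exe_path.strip('"')
    return " ".join([f'"{exe}"', *switches])


# Example path is an assumption, not the real install location.
print(quoted_command_line(r"C:\example\nsclient.exe", "/test"))
```

Passing a path that is already quoted gives the same result, so the helper can be applied to whatever tab completion hands you.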