OK, I’m sitting on a train again (hrm, I get the feeling I’ve done that already in the last few days – oh wait, I did that just on Monday), this time to Berlin.

My boss ordered me to attend a workshop covering the implementation of Shibboleth (for those of you who can’t associate anything with that term – it’s an implementation of single sign-on, also covering distributed authorization and authentication) somewhere in Berlin Spandau (Evangelisches Johannesstift Berlin).

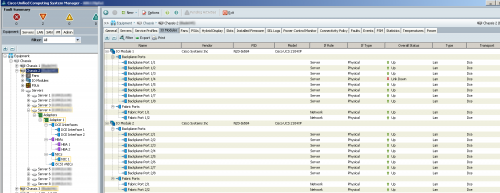

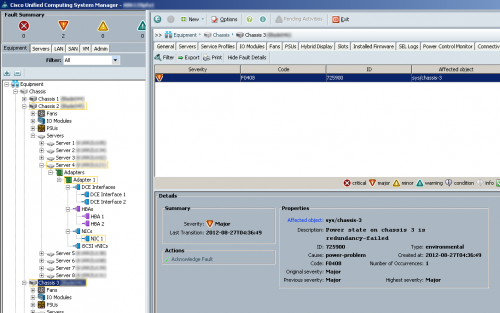

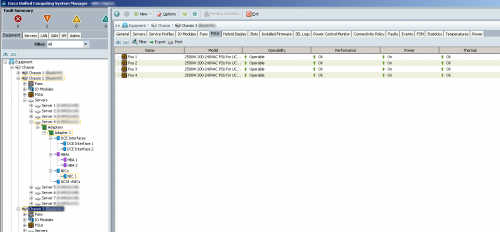

Yesterday was quite amazing workwise: we lifted the 75kg blade chassis into the rack (*yuck* there was a time I was completely against Dell stuff, but recently that has changed), plugged all four C22 plugs into the rack’s PDUs and into the chassis, patched the management interface (which is *waaay* too slow for a dedicated management daughter board) and started the chassis for the first time. *ugh* That scared me .. that wasn’t noise like an xSeries or any other rack-based server we have around, more like an airplane taking off. You can literally stand behind the chassis and get your hair dried (if you need to). So I looked at the blades together with my co-worker and we figured out that they don’t have any coolers of their own anymore, they are just using the cooling the chassis provides.

Another surprise awaited us when we thought we could use the integrated switch to provide network for both integrated network cards (Broadcom NetXtreme II). *sigh* You need two separate switches to serve two network cards, even if you only have two blades in the chassis (which provides space for 10 blades). *sigh* That really sucks, but it’s the same with the FC stuff …

So, we are waiting yet again for Dell to make us an offer, and on top of that, the sales representative doesn’t have the slightest idea whether the FC passthrough module includes SFPs or not … *yuck*

I must say, I’m impressed by the Dell hardware, but I’m really disappointed by their sales representative.