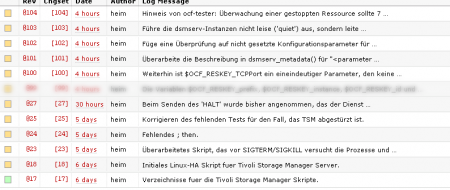

As I said previously, I have been writing my own OCF resource agent for IBM’s Tivoli Storage Manager Server, and I finally finished it yesterday evening (this post alone took me about two hours to write).

It only took me about four work days (roughly four hours each, which weren’t recorded in that subversion repository), plus most of this week at home (10 hours a day) and about one hundred subversion revisions. The good part is that it actually just works 😀 (I was amazed at how well, actually). Now you’re gonna say, “But Christian, why didn’t you use the included init script and just fix it up so that it is actually compliant with the LSB standard?”

The answer is rather simple: yeah, I could have done that, but you also know that wouldn’t have been fun. Life is all about learning, and learn something I did (even if I hit my head against the wall from time to time 😉 during those few days) … There are still one or two things I might want to add or change in the future (maybe next week), like

- adding support for monitor depth by querying the dsmserv instance via dsmadmc (if you read through the resource agent, I already use it for the shutdown/pre-shutdown stuff)

- I still have to test it properly in a pre-production environment (as Alan Robertson mentioned in his one-and-a-half-hour talk on Linux-HA 2.0 and on pages 100-102 of his slides)

- I’ll probably configure the IBM RSA to act as a stonith device (shoot the other node in the head) – just in case one of the nodes ever gets stuck in a state where the box is still up but doesn’t react to any requests anymore
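The deeper monitor from the first item could look roughly like the sketch below. It is not taken from the actual resource agent: the function names, the admin credentials and the choice of `query status` as the probe are assumptions made for illustration. `OCF_CHECK_LEVEL` is the variable the cluster uses to pass the requested monitor depth, and the return codes are the standard OCF ones.

```shell
#!/bin/sh
# Hypothetical deep-monitor sketch; NOT the author's resource agent.

# Standard OCF exit codes used by monitor actions.
OCF_SUCCESS=0
OCF_ERR_GENERIC=1
OCF_NOT_RUNNING=7

# Assumed settings; override them via the environment.
: "${DSMADMC:=dsmadmc}"
: "${TSM_ADMIN_ID:=admin}"
: "${TSM_ADMIN_PASSWORD:=secret}"

# Shallow check: is a dsmserv process present at all?
dsmserv_is_running() {
    pgrep -x dsmserv >/dev/null 2>&1
}

dsmserv_monitor() {
    if ! dsmserv_is_running; then
        return $OCF_NOT_RUNNING
    fi
    # Depth 0 (the default): the process check is all we do.
    if [ "${OCF_CHECK_LEVEL:-0}" -lt 10 ]; then
        return $OCF_SUCCESS
    fi
    # Depth 10 and above: ask the server itself whether it still answers.
    # Note: a password passed on the command line is visible in ps output.
    if "$DSMADMC" -id="$TSM_ADMIN_ID" -password="$TSM_ADMIN_PASSWORD" \
        -dataonly=yes "query status" >/dev/null 2>&1; then
        return $OCF_SUCCESS
    fi
    return $OCF_ERR_GENERIC
}
```

With this layout, a hung server that still has a process would report a generic error at depth 10 but would look healthy at depth 0, which is exactly the gap the deeper check is meant to close.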

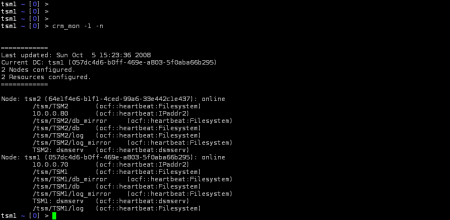

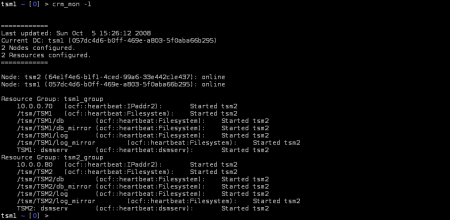

So here’s a small (well, the picture is rather big 😛 duuh) screen capture of a grouped resource (basically IP + filesystem mounts + dsmserv instance) migrating to a different physical host (both hosts share some storage in this example; I used two VMs).

First, the VM configuration. It looks a bit messy, as I needed two database volumes for Tivoli, two recovery log volumes for Tivoli, one Active Data storage pool for Tivoli and one volume for the configuration of the Tivoli Storage Manager server itself. I could have done it with only 5 disks each, but I wanted to test how Linux-HA handles the migration of the resources, and also how Tivoli handles it.

Now, the first hard disk is where the system (and all the other stuff, like heartbeat …) is installed. The VM has two network cards, one for the cluster link and the other for serving the public network. It also has a second controller, simply because of the way VMware handles disk sharing. This controller is set to SCSI Bus Sharing = Physical (or scsi?.sharedBus = "physical" if you prefer the vmx term), which allows it to share hard disks with other virtual machines.

I then started installing stuff (I needed heartbeat-2.1.3, heartbeat-pils-2.1.3 and heartbeat-stonith-2.1.3 – which sadly pulls in GNOME due to “hb_gui”) and imported the configurations (they are in my earlier post about Setting up Linux-HA) like this:

```shell
cibadmin -o resources -C -x resources.xml
cibadmin -o constraints -C -x constraints.xml
```

And that’s basically it. The cluster is up and running (even though I set dsmserv to stopped for the time being, since I wasn’t sure whether the resource agent would work). Now that we’re done setting up the cluster, it basically looks as shown in the picture.

The good part about the constraints, though, is that they are the “good kind” of resource constraints. They basically say “run the resource group tsm1_group on the host tsm1, but if you really have to, starting it on the host tsm2 is also ok”. If you looked closely at my resources.xml, you might have noticed that I specified name="target_role" value="stopped" for each dsmserv resource.

Well, I did this on purpose, since I didn’t know whether the resource agent was actually gonna work. Once I knew (I played with it for a while, remember?), I started the resources by issuing this:

```shell
cibadmin -o resources -M -X '<nvpair name="target_role" id="dsmserv_tsm1_status" value="started"/>'
cibadmin -o resources -M -X '<nvpair name="target_role" id="dsmserv_tsm2_status" value="started"/>'
```

Now guess what happens if you reboot one box? Exactly. Linux-HA figures out after 30 seconds that the box is dead (which it is, at least from the service’s point of view) and simply starts the resources on the still “living” cluster node. Now, let’s play with those resource groups. By simply issuing

|

1 |

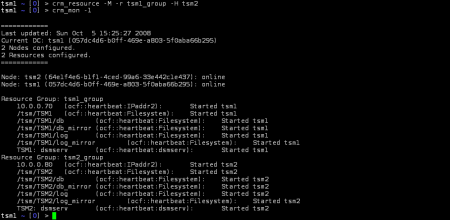

crm_resource -M -r tsm1_group -H tsm2 |

we’re gonna tell Linux-HA to migrate the resource group to the second host and keep it pinned there. As you can see in the screenshot, it actually takes a bit until Linux-HA starts to enforce the policy that crm_resource just created for us.

After a brief moment (about thirty seconds – due to the OCF resource agent sleeping for 2*5s), the resources shut down on the first host.

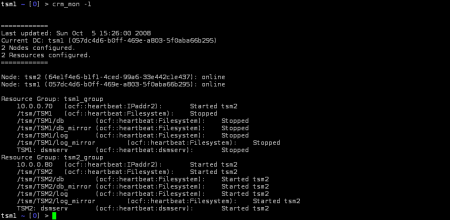

Now, after thinking about it, I could have done this differently. As you can see, Linux-HA first shuts down the whole resource group on one host before it starts bringing up any resources on the other (because it treats the resource group as a single resource itself). This implies a longer outage than if I had done it with before/after constraints. I still might do that, but for now the resource group is ok.

As you can see, about fifty seconds after I ordered Linux-HA to migrate the resource group tsm1_group to the second host, it had finally enforced the policy and the resources were up and running again. Now, sadly Linux-HA doesn’t do something like vMotion does (copying the memory map and stuff), which would save us the trouble of doing the shutdown in the first place. I know OpenVZ does support that kind of stuff, but I guess that’s just day-dreaming for now. Would make one hell of a solution 😛
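Since `crm_resource -M` keeps the group pinned to tsm2, sooner or later you will want to lift that pin again so the normal placement rules apply. Here is a small sketch of how that might be wrapped; the function names are made up for this example, while `-W` (locate) and `-U` (un-migrate) are the crm_resource options I would expect to use for this on heartbeat 2.1.x, so check `crm_resource --help` on your version first.

```shell
#!/bin/sh
# Hypothetical helper functions around crm_resource; the function names are
# invented for this sketch. CRM_RESOURCE can be overridden for a dry run.
: "${CRM_RESOURCE:=crm_resource}"

# Pin a resource (or resource group) to a host, as done in the post.
pin_resource() {
    "$CRM_RESOURCE" -M -r "$1" -H "$2"
}

# Remove the constraint that an earlier "crm_resource -M" created.
unpin_resource() {
    "$CRM_RESOURCE" -U -r "$1"
}

# Ask the cluster where the resource is currently running.
locate_resource() {
    "$CRM_RESOURCE" -W -r "$1"
}
```

For example, `unpin_resource tsm1_group` followed by `locate_resource tsm1_group` should show whether the group moves back to tsm1 once the pin is gone.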

Oh yeah, and if you’re as annoyed as I am by heartbeat (or rather logd) polluting your messages file, and you are a lucky beggar running syslog-ng, simply put this into your /etc/syslog-ng/syslog-ng.conf (or rather into /etc/syslog-ng/syslog-ng.conf.in on SLES10, then run SuSEconfig --module syslog-ng):

```
# The main filter for all Heartbeat related programs
filter f_ha {
    program("^Filesystem$")
    or program("^IPaddr2$")
    or program("^dsmserv$")
    or program("^attrd$")
    or program("^attrd_updater$")
    or program("^ccm$")
    or program("^ccm_tool$")
    or program("^cib$")
    or program("^cibadmin$")
    or program("^cluster$")
    or program("^cl_status$")
    or program("^crmadmin$")
    or program("^crmd$")
    or program("^crm_attribute$")
    or program("^crm_diff$")
    or program("^crm_failcount$")
    or program("^crm_master$")
    or program("^crm_resource$")
    or program("^crm_standby$")
    or program("^crm_uuid$")
    or program("^crm_verify$")
    or program("^haclient$")
    or program("^heartbeat$")
    or program("^ipfail$")
    or program("^logd$")
    or program("^lrmd$")
    or program("^mgmtd$")
    or program("^pengine$")
    or program("^stonithd$")
    or program("^tengine$");
};
filter f_ha_debug { level(debug); };
filter f_ha_main { not level(debug); };

#
# heartbeat messages in separate file:
#
destination ha_main {
    file("/var/log/heartbeat/ha-log"
         owner(root) group(root) create_dirs(yes)
         dir_group(root) dir_owner(root) dir_perm(0700));
};
destination ha_debug {
    file("/var/log/heartbeat/ha-debug"
         owner(root) group(root) create_dirs(yes)
         dir_group(root) dir_owner(root) dir_perm(0700));
};

log { source(src); filter(f_ha); filter(f_ha_main); destination(ha_main); };
log { source(src); filter(f_ha); filter(f_ha_debug); destination(ha_debug); };
```

That should get you a directory named heartbeat in /var/log with two logfiles: the main log “ha-log”, which holds the important stuff, and the debug log “ha-debug”. You should also add a logrotate rule somewhere, as those two files grow big rather fast.
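A logrotate rule for those two files could look something like the sketch below. The schedule and retention values (weekly, four rotations) are assumptions, not something I have tuned, and the function name is made up. It uses copytruncate so that syslog-ng does not have to be told to reopen its files, at the price of possibly losing a few lines written during the copy.

```shell
#!/bin/sh
# Sketch of a logrotate rule for the two heartbeat logs.
# Schedule and retention are assumed values; adjust them to your needs.
print_heartbeat_logrotate() {
    cat <<'EOF'
/var/log/heartbeat/ha-log /var/log/heartbeat/ha-debug {
    weekly
    rotate 4
    compress
    missingok
    notifempty
    copytruncate
}
EOF
}
```

To install it, redirect the output into the logrotate drop-in directory, for example `print_heartbeat_logrotate > /etc/logrotate.d/heartbeat` (as root).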
