Well, I’m in a situation where I need to move all volumes from one controller to two others. So I looked at the options I had available:

- Setting everything up again from scratch: no option at all!

- vol copy: rather slow, so not an option

- ndmpcopy: That’s exactly what I needed!

ndmpcopy is a great way to copy a whole volume, including its files (and therefore FCP LUNs), to another volume or controller.

First I threw in a crossover cable, since our backup system starts its daily run at around 6 PM, and anything else going over IP between 6 PM and 6 AM is seriously impaired by it. I configured the additional ports on all three controllers (picking a private, non-routed range just in case) and then kicked off a simple bash script that ran the following:

```bash
# -sa / -da: user:password for the source and destination NDMP daemons
ssh fas03 ndmpcopy -sa ndmp:ndmppass -da ndmp:ndmppass 192.168.2.30:/vol/vol_xen_boot 192.168.2.40:/vol/vol_xen_boot
```
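The post only shows the single ndmpcopy call. Below is a minimal sketch of what a wrapper looping over several volumes could look like. The job list and the `DRY_RUN` switch are hypothetical (only `vol_xen_boot`, `fas03`, the two IPs and the credentials appear above), and because the volumes go to two different controllers, each entry pairs a volume with its destination IP. By default it only prints the commands.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the ndmpcopy call shown above: one copy per
# volume, run sequentially over the crossover link. Only vol_xen_boot, fas03,
# the IPs and the credentials come from the post; JOBS entries are
# "volume:destination-ip" pairs you would fill in yourself.
set -euo pipefail

CTRL=fas03              # controller the ndmpcopy command is issued on
SRC_IP=192.168.2.30     # source controller (crossover address)
AUTH=ndmp:ndmppass      # user:password for -sa (source) and -da (destination)
DRY_RUN=${DRY_RUN:-1}   # 1 = only print the commands, 0 = actually run them
JOBS=(
  "vol_xen_boot:192.168.2.40"
)

copy_volume() {
  local vol=$1 dst_ip=$2
  local -a cmd
  cmd=(ssh "$CTRL" ndmpcopy -sa "$AUTH" -da "$AUTH"
       "$SRC_IP:/vol/$vol" "$dst_ip:/vol/$vol")
  if [[ $DRY_RUN == 1 ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

for job in "${JOBS[@]}"; do
  copy_volume "${job%%:*}" "${job#*:}"
done
```

Running the copies one after another, rather than in parallel, keeps them from competing for the single crossover link; setting `DRY_RUN=0` in the environment would execute them for real.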

Now, that in itself worked like a charm, as you can see from the output below.

```
Ndmpcopy: Starting copy [ 32 ] ...
Ndmpcopy: 192.168.2.30: Notify: Connection established
Ndmpcopy: 192.168.2.40: Notify: Connection established
Ndmpcopy: 192.168.2.30: Connect: Authentication successful
Ndmpcopy: 192.168.2.40: Connect: Authentication successful
Ndmpcopy: 192.168.2.30: Log: DUMP: creating "/vol/vol_xen_boot/../snapshot_for_backup.17" snapshot.
Ndmpcopy: 192.168.2.30: Log: DUMP: Using Full Volume Dump
Ndmpcopy: 192.168.2.30: Log: DUMP: Date of this level 0 dump: Fri Oct 5 23:41:47 2012.
Ndmpcopy: 192.168.2.30: Log: DUMP: Date of last level 0 dump: the epoch.
Ndmpcopy: 192.168.2.30: Log: DUMP: Dumping /vol/vol_xen_boot to NDMP connection
Ndmpcopy: 192.168.2.30: Log: DUMP: mapping (Pass I)[regular files]
Ndmpcopy: 192.168.2.30: Log: DUMP: mapping (Pass II)[directories]
Ndmpcopy: 192.168.2.30: Log: DUMP: estimated 14916204 KB.
Ndmpcopy: 192.168.2.30: Log: DUMP: dumping (Pass III) [directories]
Ndmpcopy: 192.168.2.30: Log: DUMP: dumping (Pass IV) [regular files]
Ndmpcopy: 192.168.2.40: Log: RESTORE: Fri Oct 5 23:41:53 2012: Begin level 0 restore
Ndmpcopy: 192.168.2.40: Log: RESTORE: Fri Oct 5 23:41:53 2012: Reading directories from the backup
Ndmpcopy: 192.168.2.40: Log: RESTORE: Fri Oct 5 23:41:55 2012: Creating files and directories.
Ndmpcopy: 192.168.2.40: Log: RESTORE: Fri Oct 5 23:41:55 2012: Writing data to files.
Ndmpcopy: 192.168.2.30: Log: DUMP: dumping (Pass V) [ACLs]
Ndmpcopy: 192.168.2.30: Log: DUMP: 14857192 KB
Ndmpcopy: 192.168.2.30: Log: DUMP: DUMP IS DONE
Ndmpcopy: 192.168.2.30: Log: DUMP: Deleting "/vol/vol_xen_boot/../snapshot_for_backup.17" snapshot.
Ndmpcopy: 192.168.2.40: Log: RESTORE: Fri Oct 5 23:44:52 2012: Restoring NT ACLs.
Ndmpcopy: 192.168.2.40: Log: RESTORE: RESTORE IS DONE
Ndmpcopy: 192.168.2.40: Log: RESTORE: The destination path is /vol/vol_xen_boot/
Ndmpcopy: 192.168.2.30: Notify: dump successful
Ndmpcopy: 192.168.2.40: Notify: restore successful
Ndmpcopy: Transfer successful [ 3 minutes 10 seconds ]
Ndmpcopy: Done
```
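When many volumes are copied unattended, it helps to check each run's output afterwards instead of reading it by hand. A minimal sketch, assuming the message formats exactly as they appear in this log (`Notify: dump successful`, `Notify: restore successful`, `DUMP: <n> KB` and `Transfer successful [ ... ]`); the function name `summarize_ndmpcopy` is hypothetical. It reads captured ndmpcopy output on stdin, fails if either side did not report success, and otherwise prints the amount dumped and the average throughput.

```bash
#!/usr/bin/env bash
# Sketch: summarise one ndmpcopy run from its captured console output (stdin).
# Assumes the message formats seen in the log above; other ONTAP releases may
# word them differently.
set -euo pipefail

summarize_ndmpcopy() {
  awk '
    /Notify: dump successful/    { dump_ok = 1 }
    /Notify: restore successful/ { restore_ok = 1 }
    # the final "DUMP: <n> KB" line (the "estimated" line does not match)
    /Log: DUMP: [0-9]+ KB/       { kb = $(NF - 1) }
    /Transfer successful \[/ {
      # add up "<n> hours|minutes|seconds" pairs inside the brackets
      for (i = 1; i < NF; i++) {
        if ($(i + 1) ~ /^hour/)   secs += $i * 3600
        if ($(i + 1) ~ /^minute/) secs += $i * 60
        if ($(i + 1) ~ /^second/) secs += $i
      }
    }
    END {
      if (!(dump_ok && restore_ok)) { print "FAILED"; exit 1 }
      if (secs > 0) printf "OK %d KB in %d s (%d KB/s)\n", kb, secs, kb / secs
      else          printf "OK %d KB\n", kb
    }'
}
```

Fed the log above (for example `summarize_ndmpcopy < vol_xen_boot.log`, with a hypothetical log file that captured both stdout and stderr of the run), it prints `OK 14857192 KB in 190 s (78195 KB/s)`, i.e. roughly 78,000 KB/s for this volume.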

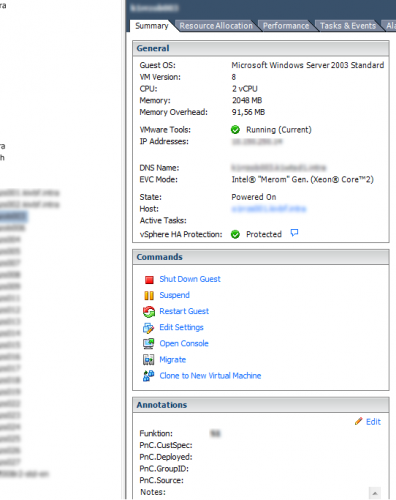

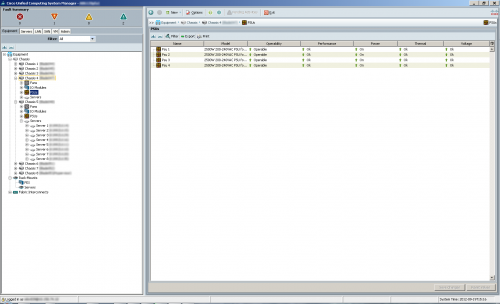

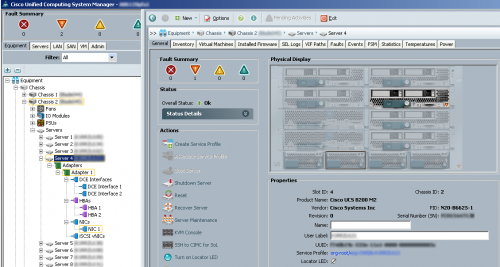

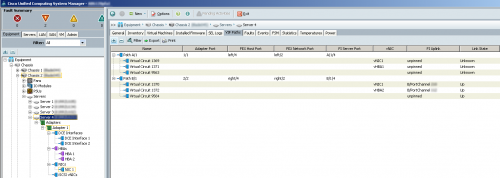

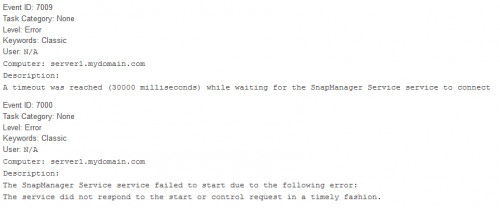

However, once I switched the UCS to the correct VSAN and modified the Boot Policy, the XenServer booted but didn’t find *any* Storage Repository. So I went to the XenServer CLI, looked at `/var/log/messages` and saw that, for whatever reason, the PBDs apparently weren’t there yet.

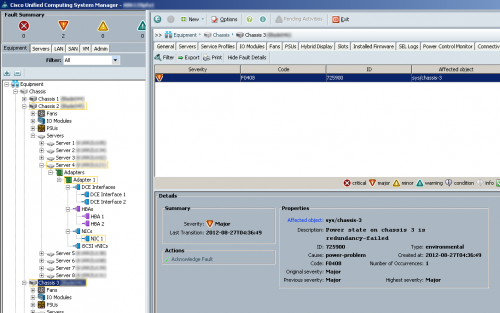

I poked around in `/dev/disk/by-id`, looked at the output of `xe pbd-list` and found that the SCSI IDs used in the PBDs actually weren’t present yet. I was like *wtf* for a moment, but then took a quick peek at the output of `lun show -v /vol/vol_xen_boot` on both NetApp controllers and found the cause of my troubles:

```
FAS03> lun show -v /vol/vol_xen_boot/xen01_lun00
        /vol/vol_xen_boot/xen01_lun00    15g (16106127360)    (r/w, online, mapped)
                Comment: "xen01_lun00"
                Serial#: dnNtFoiCJH1c
                Share: none
                Space Reservation: disabled
                Multiprotocol Type: linux
                Maps: XEN01=0
                Occupied Size:    3.3g (3571474432)
                Creation Time: Mon Feb 13 10:26:16 CET 2012
                Cluster Shared Volume Information: 0x0
```

```
FAS01> lun show -v /vol/vol_xen_boot/xen01_lun00
        /vol/vol_xen_boot/xen01_lun00    15g (16106127360)    (r/w, online, mapped)
                Comment: "xen01_lun00"
                Serial#: dfb8c4mpKwd9
                Share: none
                Space Reservation: disabled
                Multiprotocol Type: linux
                Maps: XEN01=0
                Occupied Size:    3.3g (3571474432)
                Creation Time: Mon Feb 13 10:26:16 CET 2012
                Cluster Shared Volume Information: 0x0
```

As you can see, the LUN itself is available and mapped with the correct LUN ID. However, if you look closely at the serials of both LUNs, you might notice what I noticed: they differ. So it turns out that ndmpcopy does the copying just fine, but you need to adjust the LUN serial on the destination controller to match the one from the source controller; otherwise any host that identifies the LUN by its SCSI ID, like the XenServer PBDs here, won’t find it anymore.
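Why the serial matters: as far as I know (this is an assumption, not something shown in this post), a Data ONTAP 7-Mode LUN’s SCSI ID is the NetApp NAA prefix `60a98000` followed by the hex-encoded 12-character serial, which udev exposes as `/dev/disk/by-id/scsi-360a98000<hex of serial>`. A different serial therefore means a different SCSI ID. The sketch below, built on that assumption, reads the serial from `lun show -v` output, computes the ID a PBD would reference, checks for it on the XenServer, and prints the 7-Mode commands (`lun offline`, `lun serial`, `lun online`) that would give the destination LUN the source serial. The `lun serial` syntax and the need to offline the LUN first are from memory of 7-Mode rather than from this post, so verify them against your ONTAP version; the commands are printed, not executed.

```bash
#!/usr/bin/env bash
# Sketch: restore the source LUN serial on the ndmpcopy destination.
# Assumptions (not from the post): the NetApp 7-Mode SCSI ID pattern
# "3" + "60a98000" + hex(serial), the "lun serial <path> <serial>" syntax,
# and that the LUN has to be offline while its serial is changed.
set -euo pipefail

# Pull the value of the "Serial#:" line out of `lun show -v` output (stdin).
parse_serial() {
  awk '/Serial#:/ { print $2; exit }'
}

# Hex-encode each character of the serial and add the assumed NetApp prefix;
# this is the ID udev would show as /dev/disk/by-id/scsi-<id>.
serial_to_scsi_id() {
  local serial=$1 hex="" i
  for (( i = 0; i < ${#serial}; i++ )); do
    hex+=$(printf '%02x' "'${serial:i:1}")
  done
  printf '360a98000%s\n' "$hex"
}

# On the XenServer: is the device a PBD's SCSI ID points at present?
# BY_ID_DIR is a hypothetical override, only used for testing.
scsi_id_present() {
  [[ -e "${BY_ID_DIR:-/dev/disk/by-id}/scsi-$1" ]]
}

# Print (rather than run) the commands that give the destination LUN the
# source serial; controller name and LUN path are whatever you pass in.
serial_fix_commands() {
  local dst=$1 lun=$2 serial=$3
  printf 'ssh %s lun offline %s\n' "$dst" "$lun"
  printf 'ssh %s lun serial %s %s\n' "$dst" "$lun" "$serial"
  printf 'ssh %s lun online %s\n' "$dst" "$lun"
}
```

Under the same assumption, the serial shown for FAS03, `dnNtFoiCJH1c`, corresponds to `360a98000646e4e74466f69434a483163`. To fix a copied LUN, `serial=$(ssh SRC lun show -v /vol/vol_xen_boot/xen01_lun00 | parse_serial)` followed by `serial_fix_commands DST /vol/vol_xen_boot/xen01_lun00 "$serial"` prints the three commands, where `SRC` and `DST` are placeholders for the source and destination controllers. Because the LUN goes offline, the host must not be using it at that moment, which was the case here since the XenServer couldn’t see it anyway.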

After adjusting that, everything came up just fine. That finishes my first XenServer environment; only the big one is still copying.